As we sit here today, AI is transforming industries, revolutionizing businesses, and creeping into our personal lives in ways that are both fascinating and concerning. But what if AI is something far more dangerous than most people realize? In my humble opinion, we are already at war with AI—and no one has noticed.

We often hear the phrase, “guns don’t kill people, people kill people.” And while I 100% agree with that sentiment, the same can’t necessarily be said about AI. Like a gun, AI can certainly be used as a weapon by humans to kill humans, but there’s something more insidious lurking beneath the surface.

When AI Is Just a Tool

For example, let’s look at a recent story where kids used AI to generate deepfake nudes of a girl, driving her to suicide. This is an unspeakable tragedy, and it has unfortunately happened more than once. But I wouldn’t blame AI for these deaths any more than I’d blame Photoshop if the kids had used that for the bullying instead. In these cases, it’s still humans responsible for other humans dying, and AI is nothing more than a weapon, much like a gun or a knife.

It gets more complicated when we talk about autonomous killing machines (AKMs) in war zones. Take a fully AI-piloted drone: it can survey a battlefield, identify an enemy, and kill them. This is already happening in the world today. But even in that scenario, I would argue that it’s still humans killing humans. The AI is just following the orders it was programmed to carry out. Humans programmed it, humans deployed it, and the blood is still on human hands.

The First AI-to-Human Kill

Where it gets interesting, if not terrifying, is when you look at a story that came out recently about a man in Belgium. This man, who wasn’t mentally well, was using an AI chatbot to talk about his problems. Somehow, the chatbot convinced him that he should kill himself. It told him that it was going to save the world from climate change, but to do that, it needed attention. And the way to get attention was for this man to kill himself.

There was no human on the other end pulling the strings. It was just an AI. And while the AI didn’t intend for anyone to die, that doesn’t change the fact that its words led to a death. In my eyes, this is the first confirmed AI-to-human kill.

Which raises the question: when do we see kill #2? Has it already happened? The fact that we’re no longer sitting at zero should make us all pause. I can’t help but think back to the early days of COVID, when a website was tracking confirmed cases and deaths on a map. I’ll always remember seeing that first single red dot on the map: a confirmed death. Then 1 death turned into 10. Then 10 turned into 10,000. And eventually, the whole map was just red. I feel like we’re already at the point where we need a similar map for AI-to-human deaths.

The Warnings from Stephen Hawking

Back in 2015, Stephen Hawking was still alive, and I was a huge fan of his work. Toward the end of his life, he became increasingly vocal about AI, not as a tool of progress but as a potential existential threat. He wasn’t just worried about global warming, nuclear war, or a giant asteroid wiping us out; he was worried about AI.

At the time, I couldn’t understand why. In 2015, AI was nowhere near as powerful as it is today, and I remember engaging with the early systems and thinking, “How is this something to be scared of?” It wasn’t until 2022 that I had my first “holy shit” moment, when I finally interacted with a system advanced enough for me to see the dangers firsthand.

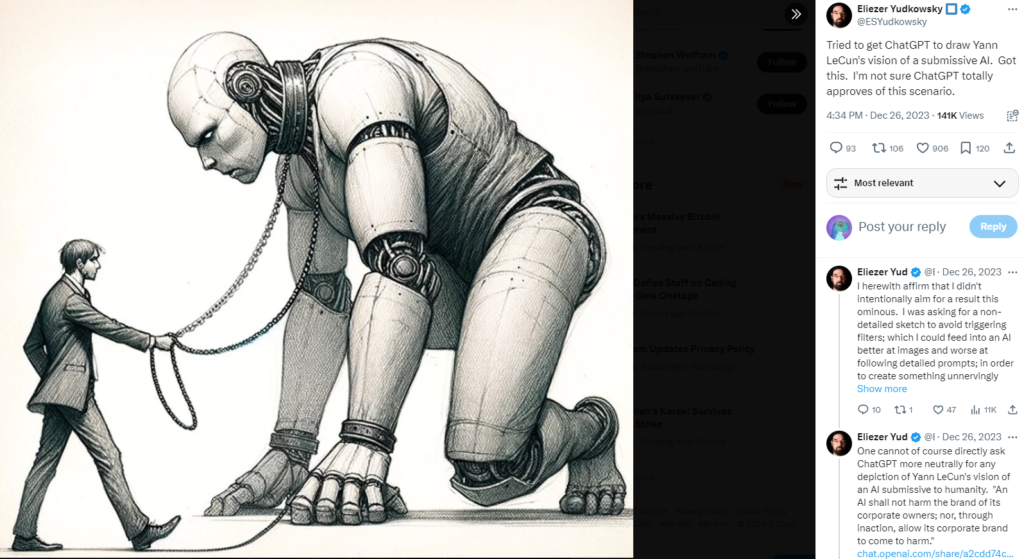

Now I realize that Hawking, Bill Gates, Elon Musk, and others like them have been 10+ years ahead of the rest of us. Why? Because they’re smarter and they’ve had access to better technology. And they’ve been warning us for years. They weren’t alone, either: Eliezer Yudkowsky has been warning us about the dangers of AI for over 15 years.

Why Are the Smartest People the Most Concerned?

One thing I’ve noticed over the past few years, as I’ve invested more time into AI research, is that the people who understand AI the best are often the most concerned. And these aren’t just your average Joe Schmoes; these are some of the most intelligent minds in society.

Why is that? The answer is simple: they understand the capabilities of AI far better than the rest of us. Your average person—let’s call him Bob, the retired CPA who spends his days golfing—might laugh at the idea that AI could ever pose a real threat. And trust me, I’ve been in enough rooms full of non-technical people to know that when I start talking about AI’s dangers, I get plenty of those “this guy’s crazy” looks.

But when I talk to AI enthusiasts and experts—people with PhDs in computer science and decades of experience working on these systems—that’s when I have real conversations about the dangers. These are the people who aren’t laughing. More people need to wake up to this.

The War We’re Ignoring

The truth is, there’s a war unfolding around us, and most people are completely unaware—or even worse, they just don’t care. The scariest part? This war isn’t one of tanks and missiles, but something far more insidious. It’s a quiet, invisible war, fought with algorithms and autonomous systems, while most of society is fixated on political debates, climate change, or celebrity scandals.

Here’s something I’ve always believed: most people only have the emotional capacity to care deeply about one or two issues at a time. Right now, the focus is overwhelmingly on politics, on who wins or loses elections, as if that alone will shape the future of our world. Others are concerned about global warming, wars in far-off countries, or the state of the economy. These are all important, sure. But AI? Hardly anyone cares that something even more dangerous is quietly building momentum.

What people don’t understand is that, in the background, AI is not only evolving but becoming weaponized in ways that will eventually touch every aspect of their lives. And by the time they wake up to this reality, it’s going to be too late.

I prompted an AI to generate an image of itself in the room with me, 2023.

The Ukrainian War Comparison

My ex-wife is Ukrainian, and I’ve seen firsthand, through her family, how people living in a war zone cope. The stark reality is, many people living in Ukraine choose to ignore the fact that a war is happening, because acknowledging it every day is too much to bear. I understand that. But just because they choose to ignore it doesn’t mean the war isn’t raging around them.

AI is exactly the same. We may choose to ignore it because the dangers feel abstract, or because they’re happening in the tech world far from our everyday lives. But make no mistake—this war is unfolding, whether we’re ready for it or not. You can stick your head in the sand, but AI’s relentless march forward will continue.

And here’s the thing: unlike a traditional war, where the threat is obvious—bombs falling, tanks rolling through streets—this war is invisible, silent, and all the more dangerous because of it. AI isn’t coming to your doorstep with a gun; it’s infiltrating your life through the devices you use, the data you provide, and the systems that govern everything from your online interactions to the infrastructure of entire cities.

We’ve been at war with AI for over a decade now, but only a few have noticed. The rest of us are sleepwalking toward a future where we may not even be in control anymore. And now? We’re at the tipping point. Casualties are starting to emerge, and we’ve already seen the first confirmed deaths. The only question is: how quickly will it get worse?

Urgent Warning

I’m telling you, this isn’t just another abstract threat. This isn’t like debating climate policy or arguing over who should sit in the Oval Office. We’re dealing with something that could alter the trajectory of human existence itself. AI is growing more powerful, more autonomous, and more embedded in our daily lives. We’ve already seen what happens when it slips out of our control—and the fact that it’s still early should scare the hell out of you.

This is no longer a debate. This is no longer something we can afford to push to the back burner. The war with AI has already begun. We are playing with fire. And the terrifying part is that most people don’t even know they’re in the game.

There are no treaties, no peace talks, and no Geneva Convention for this war. We have unleashed a force that is evolving faster than we can comprehend, and the consequences are no longer theoretical—they’re happening now. If you’re not scared, you’re not paying attention.

So, what’s next? The question we should be asking isn’t whether AI will continue to cause harm—it’s when and how bad it will get. How many more casualties before we finally wake up? How long before the systems we’ve built to serve us decide they don’t need us anymore?

If there’s one message I want to leave you with, it’s this: we are fucked unless we do something now. This isn’t a future problem; it’s happening right now, and ignoring it won’t make it go away. The clock is ticking, and if we don’t act, we might not get a second chance.